Data prep and analysis are essentially specialized forms of programming. To deliver meaningful gains in productivity and efficiency for data teams, AI agents that work alongside analysts must be specialized across four dimensions:

- Data context & semantics: Agents must understand universal data operations (types, quality rules, relationships, lineage) and your business-specific definitions to build correct workflows.

- Visual work surface: Analysts use visual workflows, SQL, spreadsheets, charts, and documents for exploration, development, and regulatory write-ups. Agents must read, generate, and edit across these surfaces. This lowers the technical bar for simpler tasks (as vibe-coding has done for programming) and brings a step change in productivity for medium-to-large tasks (as Cursor has done for programming).

- Specialized execution: Workflows span connectors (e.g., read from SharePoint; write to Tableau/Power BI), in-memory execution for ad hoc tasks, and cloud data platforms like Databricks and Snowflake for scale. Agents must execute across all three.

- Enterprise governance: Every step must respect access controls, lineage, auditability, and best practices, so that work scales with reliability and compliance.

In short, agents are useful only when they are designed to work alongside data professionals and fit into their existing processes.

The AI-driven data lifecycle

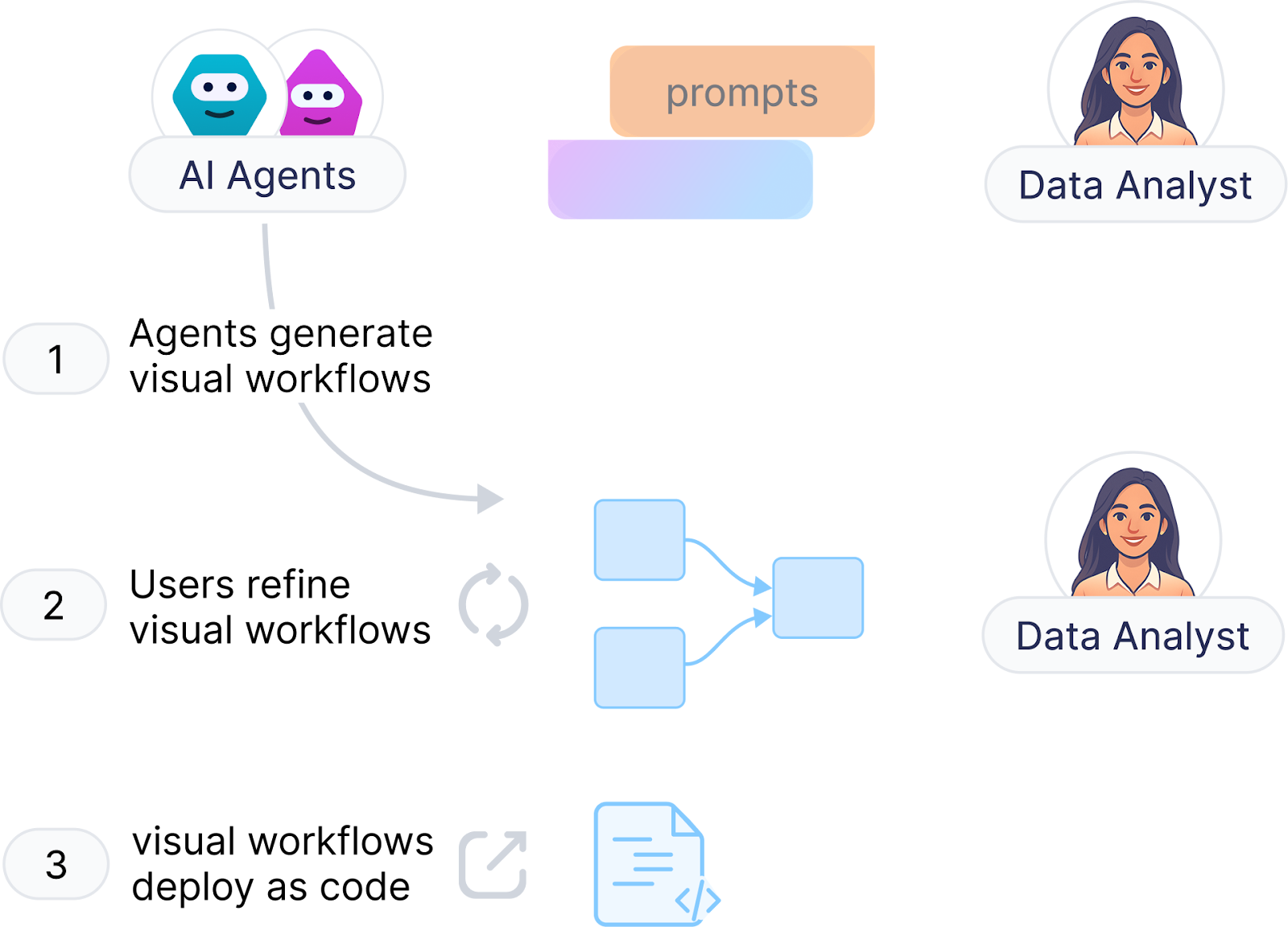

As AI generates more of the business logic through the visual work surface and natural-language prompting, the analyst's job shifts to rapidly inspecting and refining what the agents produce so that the final results can be trusted. The lifecycle is simple:

- Generate: Agents interpret the business prompt in the context of schemas, metadata, and prior work. They produce results that are easy to review as visual workflows and documents.

- Refine: Users quickly inspect and edit in a visual interface, previewing data after each step and changing steps or adding new ones, iterating until the result is correct.

- Deploy: Designs move from interactive runs (on sample or full data) to scheduled, governed production on the central data platform, following platform best practices such as versioning, tests, approvals, CI/CD, monitoring, and alerting.
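To make the Generate and Refine stages concrete, here is a minimal sketch in plain Python. It is not Prophecy's implementation: the `Step` class, the `run_with_previews` helper, and the sample `orders` data are all hypothetical, and the "generated" draft is hand-written to stand in for what an agent might propose. The point is only that a workflow made of small named steps can be previewed after each step and corrected in place.

```python
from dataclasses import dataclass
from typing import Callable

Rows = list[dict]


@dataclass
class Step:
    """One named, reviewable step in a workflow (hypothetical structure)."""
    name: str
    apply: Callable[[Rows], Rows]


def run_with_previews(steps: list[Step], rows: Rows, sample: int = 2):
    """Run steps in order and keep a small data preview after each one."""
    previews = {}
    for step in steps:
        rows = step.apply(rows)
        previews[step.name] = rows[:sample]
    return rows, previews


def total_by_region(rows: Rows) -> Rows:
    """Aggregate amounts per region, preserving first-seen region order."""
    totals: dict[str, float] = {}
    for r in rows:
        totals[r["region"]] = totals.get(r["region"], 0) + r["amount"]
    return [{"region": k, "total": v} for k, v in totals.items()]


# Illustrative sample data with an inconsistent region code.
orders = [
    {"region": "EMEA", "amount": 100},
    {"region": "emea", "amount": 50},
    {"region": "APAC", "amount": None},
    {"region": "APAC", "amount": 70},
]

# Generate: a draft workflow, standing in for agent output.
draft = [
    Step("drop_missing_amount",
         lambda rs: [r for r in rs if r["amount"] is not None]),
    Step("total_by_region", total_by_region),
]
draft_result, draft_previews = run_with_previews(draft, orders)

# Refine: the preview shows "EMEA" and "emea" as separate regions,
# so the reviewer adds a normalization step before the aggregation.
refined = [
    draft[0],
    Step("normalize_region",
         lambda rs: [{**r, "region": r["region"].upper()} for r in rs]),
    draft[1],
]
refined_result, refined_previews = run_with_previews(refined, orders)
print(refined_result)
```

In this sketch the draft run yields three regions, which is the kind of problem a per-step preview surfaces early; the refined run merges the two EMEA spellings. Deployment (scheduling, approvals, CI/CD) is deliberately out of scope here.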

Agent types needed for robust data preparation

The future of data preparation centers on multiple specialized agents, each capable of performing critical steps of the process alongside analysts. Enabling analysts in this way reduces the backlog of requests to engineering (letting engineers focus on work that requires their unique skill set) and ensures that the business context analysts possess is built directly into data preparation and analysis.

Let’s look at a few types of agents required to achieve this vision of analyst-led data prep:

- Discover & Transform agent: Helps users find relevant datasets, ingest them, and transform (cleanse, normalize, enrich, aggregate) them for insights. These agents bring the full power of coding agents, specialized for data context, to the visual layer.

- Document agent: Helps users document the data transformations applied to datasets for regulated industries such as pharma, accounting, or finance. The agent understands the data operations and the target template, produces objective sections, and walks the user through answering questions where subjective judgement is required.

- Harmonize agent: Helps data users onboard datasets from various customers and sources into a common data format so that downstream transforms and processing can be applied uniformly. It uses example workflows, mapping documents, and definitions of the target format (including relationships and constraints) to produce high-quality results.

- Requirement agent: Helps generate data workflows from requirements documents and then keeps the requirement documents up to date as the workflows change.
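As an illustration of the harmonization idea described for the Harmonize agent, the sketch below maps two differently shaped sources into one target format using a per-source mapping and a simple type constraint per column. Everything in it is an assumption made for illustration: the `TARGET_FORMAT` and `MAPPINGS` structures, the source names `acme` and `globex`, and the `harmonize` function are hypothetical and do not describe how Prophecy's agent works internally.

```python
# Target format: column name -> type each value must be castable to.
# (Hypothetical; a real target definition would also carry relationships.)
TARGET_FORMAT = {
    "customer_id": str,
    "order_date": str,
    "amount": float,
}

# One "mapping document" per source: source column -> target column.
MAPPINGS = {
    "acme": {"CustID": "customer_id", "Date": "order_date", "Total": "amount"},
    "globex": {"client": "customer_id", "ordered_on": "order_date",
               "value": "amount"},
}


def harmonize(source: str, rows: list[dict]):
    """Rename columns per the source mapping and enforce target types.

    Returns (harmonized_rows, rejected), where rejected pairs each bad
    input row with the reason it failed, so a person can review it.
    """
    mapping = MAPPINGS[source]
    harmonized, rejected = [], []
    for row in rows:
        renamed = {mapping[k]: v for k, v in row.items() if k in mapping}
        try:
            harmonized.append(
                {col: cast(renamed[col]) for col, cast in TARGET_FORMAT.items()}
            )
        except (KeyError, TypeError, ValueError) as exc:
            rejected.append((row, repr(exc)))
    return harmonized, rejected


acme_ok, acme_bad = harmonize(
    "acme", [{"CustID": 17, "Date": "2024-01-05", "Total": "19.90"}]
)
globex_ok, globex_bad = harmonize(
    "globex",
    [
        {"client": "G-1", "ordered_on": "2024-01-06", "value": 5},
        {"client": "G-2", "ordered_on": "2024-01-06", "value": "n/a"},
    ],
)
print(acme_ok + globex_ok)
print(globex_bad)
```

Rows that cannot satisfy the target format are set aside with a reason rather than silently dropped, which keeps the human review step meaningful. Downstream transforms then only need to handle the single target shape.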

How Prophecy implements this lifecycle

Prophecy has taken up the challenge of implementing these agentic capabilities alongside robust governance and execution features in a platform that sits directly on your cloud data platform, challenging the status quo of desktop-focused tools like Alteryx.

- Specialized agents understand prompts and data context (schemas, types, lineage, quality rules) to generate accurate transforms, tests, and documentation.

- Native visual and document interfaces, deeply integrated with the agents, make it easy to review results quickly with a human in the loop.

- Enterprise execution and operations, with connectors, orchestration, and observability, are built in. Visual transformations are turned into code that executes natively on Databricks, Snowflake, or BigQuery, with best practices and guardrails defined by platform teams.

You can learn more about the Prophecy platform and how it compares to tools like Alteryx here.

Ready to see Prophecy in action?

Request a demo and we’ll walk you through how Prophecy’s AI-powered visual data pipelines and high-quality open source code empower everyone to speed up data transformation.